- Fairness: are the outputs of our algorithms fair towards everyone? Is it possible that they discriminate against people based on characteristics such as gender, race, and sexual orientation?

- Are developers responsible for potential bad usages of their algorithms?

- Accountability: who is responsible for the output of the algorithm?

- Interpretability and transparency: in sensitive applications, can we get an explanation for the algorithm’s decision?

- Are we aware of human biases found in our training data? Can we reduce them?

- What should we do to use user data cautiously and respect user privacy?

The importance of ethics in machine learning shows in how much attention it now gets: it is taught in classes, a growing community of researchers works on it, there are dedicated workshops and tutorials, and Google has a team entirely devoted to it.

We are going to look into several examples.

To train or not to train? That is the question

Machine learning has evolved dramatically over the past few years, and together with the availability of data, it’s possible to do many things more accurately than before. But prior to considering implementation details, we need to pause for a second and ask ourselves: so, we can develop this model - but should we do it?

The models we develop can have bad implications. Assuming that none of my readers is a villain, let’s think in terms of “the road to hell is paved with good intentions”. How can (sometimes seemingly innocent) ML models be used for bad purposes?

- A model that detects sexual orientation can be used to out people against their will

- A model to detect criminality using face images can put innocent people behind bars

- A model that creates fake videos can be used as “evidence” for fake news

- A text generation model can be used to generate fake (positive or negative) reviews

In some cases, the answer is obvious (do we really want to determine that someone is a potential criminal based on their looks?). In other cases, it’s not straightforward to weigh all the potential malicious usages of our algorithm against the good purposes it can serve. In any case, it’s worth asking ourselves this question before we start coding.

|

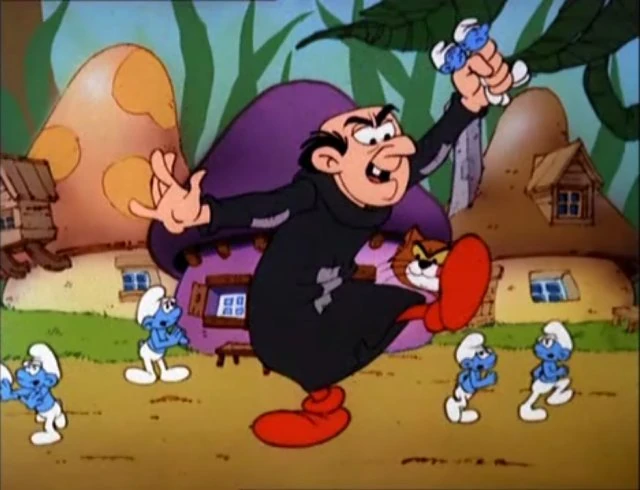

| Would you develop a model that can recognize smurfs if you knew it could be used by Gargamel? (Image source) |

Underrepresented groups in the data

So our model passed the “should we train it” phase and now it’s time to gather some data! What can go wrong in this phase?

In the previous post we saw some examples of seemingly solved tasks whose models work well only for certain populations. Speech recognition works well for white males with an American accent but less so for other populations. Text analysis tools don’t recognize African-American English as English. Face recognition works well for white men but far less accurately for dark-skinned women. In 2015, Google Photos automatically labelled pictures of black people as “gorillas”.

The common root of the problem in all these examples is insufficient representation of certain groups in the training data. There is not enough speech by women, black people, and people with non-American English accents. Text analysis tools are often trained on news texts, which are mostly written by adult white males. Finally, there are not enough facial images of black people. If you think about it, it’s not surprising. This goes all the way back to photographic film, which had problems rendering dark skin. I don’t actually think there were bad intentions behind any of these, just maybe - ignorance? We are all guilty of being self-centered, so we develop models to work well for people like us. In the case of the software industry, this mostly means “work well for white males”.

Biased supervision

When we train a model using supervised learning, we train it to perform similarly to humans. Unfortunately, it comes with all the disadvantages of humans, and we often train models to mimic human biases.

Let’s start with the classic example. Say that we would like to automate the processing of mortgage applications, i.e. to train a classifier to decide whether or not someone is eligible for a mortgage. The classifier is trained using previous mortgage applications with their human-made decisions (accepted/rejected) as gold labels. It’s important to note that we don’t exactly train a classifier to accurately predict an individual’s ability to pay back the loan; instead we train a classifier to predict what a human would decide when presented with the application.

We already know that humans have implicit biases and that sensitive attributes such as race and gender may affect these decisions negatively. For example, in the US, black people are less likely to get a mortgage. Since we don’t want our classifier to learn this bad practice (i.e. rejecting a mortgage merely because the applicant is black), we leave out those sensitive attributes from our feature vectors. The model has no access to these attributes.

Yet analyzing the classifier’s predictions with respect to the sensitive attributes may yield surprising results; for example, that black people are less likely than white people to be predicted eligible for a mortgage. The model is biased against black people. How could this happen?

Apparently, the classifier gets access to the excluded sensitive attributes through included attributes which are correlated with them. For example, if we provided the applicant’s address, it may indicate their race. (In the US, zip code is highly correlated with race.) Things can get even more complicated when using deep learning algorithms on texts. We no longer have control over the features the classifier learns. Let’s say that the classifier now gets as input a textual mortgage application. Now it may be able to detect race through writing style and word choice. And this time we can’t even remove certain suspicious features from the classifier.
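The proxy effect is easy to reproduce on made-up data. The sketch below is only an illustration under loud assumptions: the applicants, the two groups `a` and `b`, the zip codes, and the one-rule "model" (approve if the zip code's historical approval rate is at least 50%) are all hypothetical and hand-built, not taken from any real dataset or system. The model never sees the group, only the zip code.

```python
from collections import defaultdict


def make_applicants():
    """Hand-built synthetic rows: (group, zip_code, historical_decision)."""
    rows = []
    # Group "a" lives mostly in zip 11111, group "b" mostly in zip 22222.
    rows += [("a", "11111", 1)] * 70 + [("a", "11111", 0)] * 20
    rows += [("a", "22222", 1)] * 3 + [("a", "22222", 0)] * 7
    rows += [("b", "22222", 1)] * 27 + [("b", "22222", 0)] * 63
    rows += [("b", "11111", 1)] * 8 + [("b", "11111", 0)] * 2
    return rows


def fit_zip_model(rows):
    """Learn one rule per zip code; the group column is deliberately ignored."""
    totals, approved = defaultdict(int), defaultdict(int)
    for _group, zip_code, decision in rows:
        totals[zip_code] += 1
        approved[zip_code] += decision
    return {z: approved[z] / totals[z] >= 0.5 for z in totals}


def approval_rate_by_group(rows, model):
    """Audit step: share of each group that the model would approve."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, zip_code, _decision in rows:
        totals[group] += 1
        approved[group] += int(model[zip_code])
    return {g: approved[g] / totals[g] for g in totals}


applicants = make_applicants()
zip_model = fit_zip_model(applicants)
rates = approval_rate_by_group(applicants, zip_model)
print(rates)
```

Even though the group is never a feature, the audit shows a large gap between the two groups, because the zip code carries most of the group information in this toy data.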

Adversarial Removal

What can we do? We can try to actively remove anything that indicates race.

We have a model that gets as input a mortgage application (X), learns to represent it as f(X) (f encodes the application text, or extracts discrete features), and predicts a decision (Y) - accept or reject. We would like to remove information about some sensitive feature Z, in our case race, from the intermediate representation f(X).

This can be done by jointly training a second classifier, an “adversarial” classifier, which tries to predict race (Z) from the representation f(X). The adversary’s goal is to predict race successfully, while at the same time, the main classifier aims both to predict the decision (Y) with high accuracy, and to fail the adversary. To fail the adversary, the main classifier has to learn a representation function f which does not include any signal pertaining to Z.
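One way to write down the two competing goals is as a single min-max objective. This is a sketch, not necessarily the exact formulation used in the papers: the decision classifier $c$, the adversary $a$, the losses $\mathcal{L}_Y$ and $\mathcal{L}_Z$, and the trade-off weight $\lambda > 0$ are notation assumed here for illustration; only $X$, $Y$, $Z$ and $f$ come from the description above.

$$
\min_{f,\,c}\;\max_{a}\;\; \mathcal{L}_Y\big(c(f(X)),\,Y\big)\;-\;\lambda\,\mathcal{L}_Z\big(a(f(X)),\,Z\big)
$$

The adversary maximizes the expression, which amounts to minimizing its own loss $\mathcal{L}_Z$ (predicting race well). The main model minimizes it, which means keeping the decision loss $\mathcal{L}_Y$ low while pushing the adversary's loss up.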

The idea of removing features from the representation using adversarial training was presented in this paper. Later, this paper used the same technique to remove sensitive features. Finally, this paper experimented with textual input, and found that demographic information of the authors is indeed encoded in the latent representation. Although they managed to “fail” the adversary (as the architecture requires), they found that training a post-hoc classifier on the encoded texts still managed to detect race somewhat successfully. They concluded that adversarial training isn’t reliable for completely removing sensitive features from the representation.

Biased input representations

We’re living in an amazing time with positive societal changes. I’ll focus on one example that I personally relate to: gender equality. Every once in a while, my father emails me an article about some successful woman (CEO/professor/entrepreneur/etc.). He is genuinely happy to see more women in these jobs because he remembers a time when there were almost none. As for me, I wish for a time when this would be a non-issue - when my knowledge that women can do these jobs and the number of women actually doing these jobs finally make sense together.

In the Ethics in NLP workshop at EACL 2017, Joanna Bryson distinguished between 3 related terms: bias is knowing what "doctor" means, including that more doctors are male than female (if someone tells me they’re going to the doctor, I normally imagine they’re going to see a male doctor). Stereotype is thinking that doctors should be males (and consequently, that women are unfit to be doctors). Finally, prejudice is if you only use (go to / hire) male doctors. The thing is, while we as humans--or at least some of us--can distinguish between the three, algorithms can’t tell the difference.

One of the places where this inability of algorithms to tell bias from stereotype shows up is word embeddings. We discussed in a previous post this paper which showed that word embeddings capture gender stereotypes. They showed that, for instance, when using embeddings to solve analogy problems (a toy problem which is often used to evaluate the quality of word embeddings), they may suggest that father is to doctor as mother is to nurse, and that man is to computer programmer as woman is to homemaker. This obviously happens because statistically there are more female nurses and homemakers, and more male doctors and computer programmers, than vice versa, which is reflected in the training data.
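To make the analogy test concrete, here is a minimal sketch of the usual vector-arithmetic recipe (take b - a + c and return the nearest remaining word by cosine similarity). The three-dimensional vectors are hand-picked toy values, not real trained embeddings, and the names `EMBEDDINGS` and `solve_analogy` are made up for this example.

```python
import math

# Toy 3-d "embeddings", chosen by hand for illustration only.
# Axis 0 loosely encodes gender, axis 1 a medical job, axis 2 another job.
EMBEDDINGS = {
    "man": (1.0, 0.0, 0.0),
    "woman": (-1.0, 0.0, 0.0),
    "doctor": (0.5, 1.0, 0.0),
    "nurse": (-0.5, 1.0, 0.0),
    "programmer": (0.5, 0.0, 1.0),
    "homemaker": (-0.5, 0.0, 1.0),
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def solve_analogy(a, b, c, embeddings=EMBEDDINGS):
    """Return the word d such that 'a is to b as c is to d'."""
    query = tuple(
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    )
    # As is customary, the three query words are excluded from the answers.
    candidates = [w for w in embeddings if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(query, embeddings[w]))


print(solve_analogy("man", "doctor", "woman"))
print(solve_analogy("man", "programmer", "woman"))
```

Because the toy vectors for the job words lean towards one end of the gender axis, the arithmetic lands on the stereotyped answer; real embeddings pick up a similar lean from co-occurrence statistics.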

However, we treat word embeddings as representing meaning. By doing so, we engrave “male” into the meaning of “doctor” and “female” into the meaning of “nurse”. These embeddings are then commonly used in applications, which might inadvertently amplify these unwanted stereotypes.

The suggested solution in that paper was to “debias” the embeddings, i.e. to try to remove the bias from them. The first problem with this approach is that you can only remove biases that you are aware of. The second, which I find worse, is that it removes some of the characteristics of a concept. As opposed to the removal of sensitive features from classification models, in which the features we try to remove (e.g. race) have nothing to contribute to the classification, here we are removing an important part of a word’s meaning. We still want to know that most doctors are men, we just don’t want to have a meaning representation in which woman and doctor are incompatible concepts.
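As a rough illustration of what such a removal can look like, the sketch below projects a "gender direction" out of two word vectors. This is an assumption about the general idea, not a reproduction of the paper's procedure: the toy vectors, the choice of man - woman as the direction, and the helper `remove_direction` are all hypothetical.

```python
import math

# Hand-picked toy vectors; axis 0 loosely encodes gender.
man, woman = (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)
doctor, nurse = (0.5, 1.0, 0.0), (-0.5, 1.0, 0.0)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    """Scale v to length 1."""
    norm = math.sqrt(dot(v, v))
    return tuple(x / norm for x in v)


def remove_direction(v, direction):
    """Subtract from v its component along the given direction."""
    d = unit(direction)
    scale = dot(v, d)
    return tuple(x - scale * y for x, y in zip(v, d))


# Assumed bias direction: the difference between "man" and "woman".
gender_direction = tuple(m - w for m, w in zip(man, woman))

debiased_doctor = remove_direction(doctor, gender_direction)
debiased_nurse = remove_direction(nurse, gender_direction)
print(debiased_doctor, debiased_nurse)
```

In this toy setup the two job vectors differed only along the gender axis, so after the projection they become identical. That is the flip side of the worry above: the operation removes the stereotype and the statistical fact together.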

The sad and trivial take-home message is that algorithms only do what we tell them to, so “racist algorithms” (e.g. the Microsoft chatbot) are only racist because they learned it from people. If we want machine learning to help build a better reality, we need to research not just techniques for improved learning, but also ways to teach algorithms what not to learn from us.